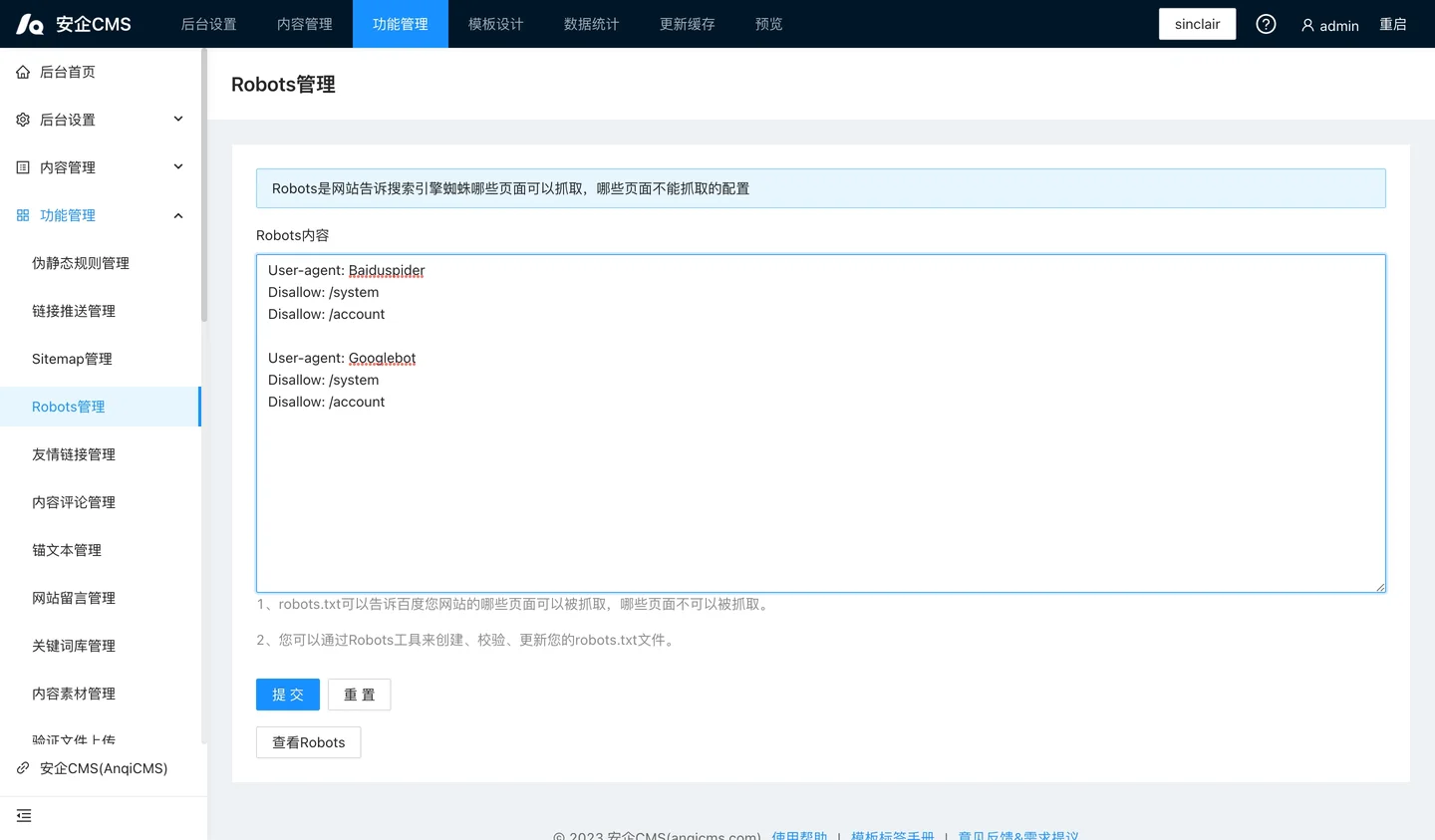

The robots.txt file is a crawler protocol file that tells search engine spiders which pages of your website may be crawled and which may not. It is a plain text file that follows the Robots Exclusion Standard and consists of one or more rules. Each rule allows or disallows specific search engine spiders from crawling files under designated paths on the website. If you do not set one, all files may be crawled by default.

The robots.txt rules include:

User-agent: The user agent (UA) identifier, which can be viewed here.

Allow: Permits crawling of the specified path.

Disallow: Prevents crawling of the specified path.

Sitemap: The location of the sitemap. There is no limit on the number of entries, so you can add multiple sitemap links.

Comment line: Begins with #.
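As a rough illustration of how these directives fit together, the sketch below feeds a small rule set to Python's standard `urllib.robotparser` module. The domain `example.com`, the `/admin/` path, and the two sitemap file names are assumptions made up for this example, and `site_maps()` requires Python 3.8 or later. Note that this particular parser applies the first matching rule in a group, which is why `Disallow: /admin/` is listed before `Allow: /`; other crawlers may resolve conflicts differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: example.com, /admin/ and the sitemap names are placeholders.
ROBOTS_TXT = """\
# Keep every crawler out of the admin area
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://example.com/sitemap-pages.xml
Sitemap: https://example.com/sitemap-posts.xml
"""

parser = RobotFileParser()
# parse() takes an iterable of lines; comment lines starting with # are ignored.
parser.parse(ROBOTS_TXT.splitlines())

# A path under /admin/ is blocked, everything else is allowed.
admin_allowed = parser.can_fetch("Googlebot", "https://example.com/admin/login")
home_allowed = parser.can_fetch("Googlebot", "https://example.com/index.html")

# Every Sitemap entry is collected, in file order (Python 3.8+).
sitemaps = parser.site_maps()

print(admin_allowed, home_allowed, sitemaps)
```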

Below is a simple robots.txt file containing two rules:

User-agent: YisouSpider

Disallow: /

User-agent: *

Allow: /

Sitemap: /sitemap.xml

The meaning of 'User-agent: YisouSpider' is: the rule that follows targets 'YisouSpider' (the Yisou search engine crawler). It can also be set to 'bingbot' (Bing), 'Googlebot' (Google), and so on. The user-agent names of other search engine crawlers can be viewed here.

The meaning of 'Disallow: /' is: this crawler is prohibited from crawling any content on the site.

The meaning of 'User-agent: *' is: the rule that follows applies to all user agents (* is a wildcard). Together with 'Allow: /', it lets all other user agents crawl the entire website. Omitting this rule gives the same result, because by default user agents can crawl the entire website.

The meaning of 'Sitemap: /sitemap.xml' is: the path to the website's sitemap file is /sitemap.xml.
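The behavior described above can be checked with a minimal sketch, again assuming Python's standard `urllib.robotparser` as the parser (real search engine crawlers may differ in detail). The helper `build_parser`, the page URL on `example.com`, and the variable names are hypothetical and exist only for this illustration. Each rule group is written with its directives on consecutive lines, since this parser treats a blank line as the end of a group.

```python
from urllib.robotparser import RobotFileParser


def build_parser(robots_txt: str) -> RobotFileParser:
    """Hypothetical helper: parse robots.txt text without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


# The example file from this article.
EXAMPLE = """\
User-agent: YisouSpider
Disallow: /

User-agent: *
Allow: /

Sitemap: /sitemap.xml
"""

# The same file with the catch-all 'User-agent: *' group removed.
WITHOUT_CATCH_ALL = """\
User-agent: YisouSpider
Disallow: /

Sitemap: /sitemap.xml
"""

PAGE = "https://example.com/article.html"  # placeholder URL

full = build_parser(EXAMPLE)
trimmed = build_parser(WITHOUT_CATCH_ALL)

# YisouSpider is blocked everywhere; other crawlers are allowed either way.
results = {
    "yisou_full": full.can_fetch("YisouSpider", PAGE),
    "google_full": full.can_fetch("Googlebot", PAGE),
    "yisou_trimmed": trimmed.can_fetch("YisouSpider", PAGE),
    "google_trimmed": trimmed.can_fetch("Googlebot", PAGE),
}
print(results)
```

Under these assumptions, `Googlebot` gets the same answer from both files, which matches the point that the `User-agent: *` group with `Allow: /` only restates the default.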